In the 1995 cult classic film Clueless there is a thirty second sequence that fashion fanatics still talk about to this day:

The idea of having a computer program that knows what’s in your closet and that can help you experiment with different combinations of garments has really captured people’s imagination.

If you look online, you’ll find thousands of comments that ask the same question: why isn’t this real yet?

There are already apps out there that allow you to digitize your closet and that can give you recommendations on what to wear, but they all lack the most magical feature of the Clueless closet: showing you what you would look like wearing the clothes.

With the rise of generative diffusion-based tools, we thought now might be the right time to check if the Clueless closet can be brought to life or not.

Exploration

Gathering data

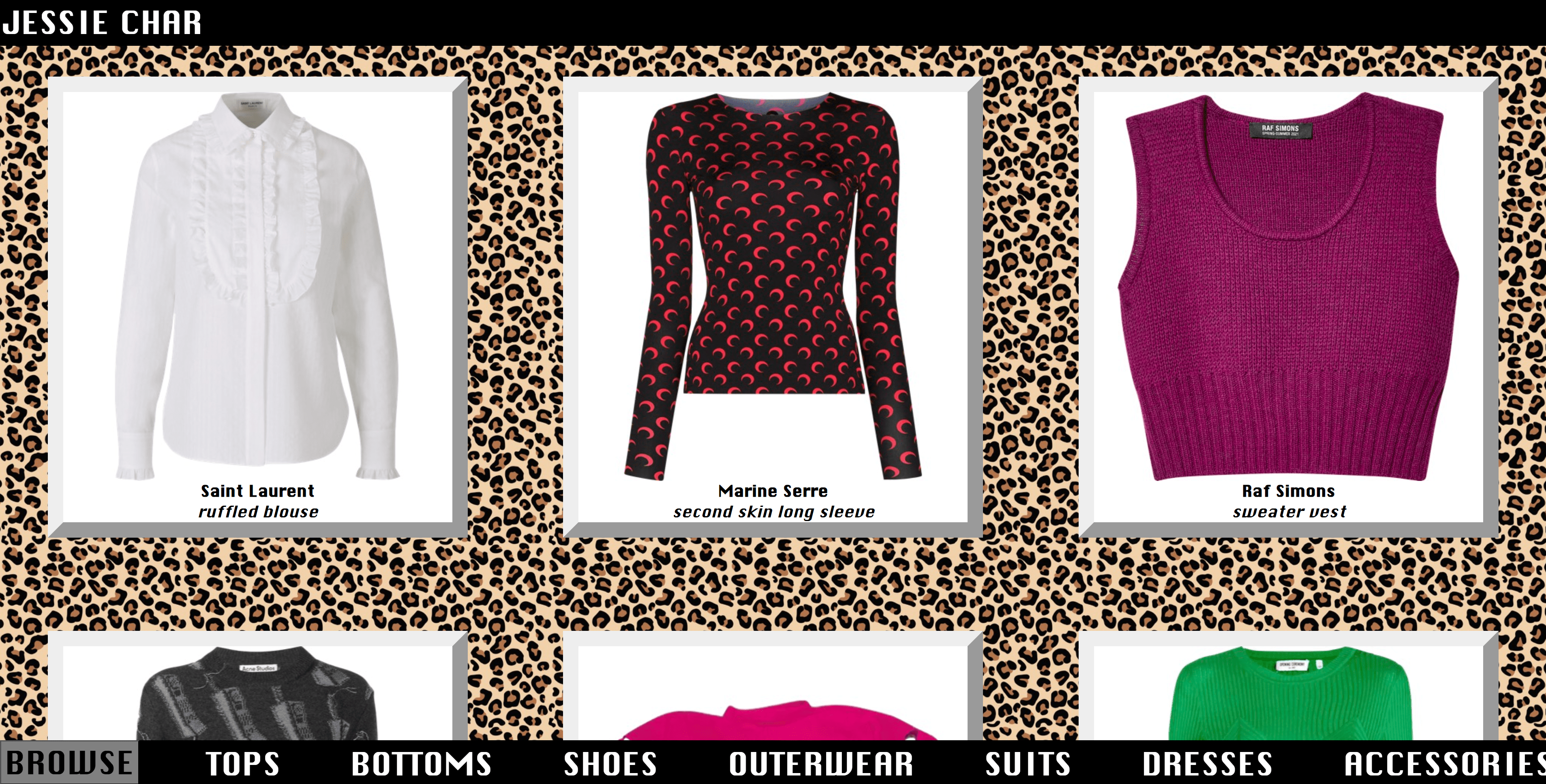

The first thing we needed was access to images of a wide variety of garments. Sadly, no one in the Spatial Commerce team is particularly fashionable, which is why we partnered with Jessie Char and Maxwell Neely-Cohen, who have both cataloged and digitized their entire closets and created their own unique versions of the Clueless closet.

Training models for garments

In a previous project we used DreamBooth to fine-tune Stable Diffusion 1.4 to faithfully reproduce a wide variety of products:

A year later, there is a new and much more lightweight technique for doing the same thing called Low-Rank Adaptation (LoRA).

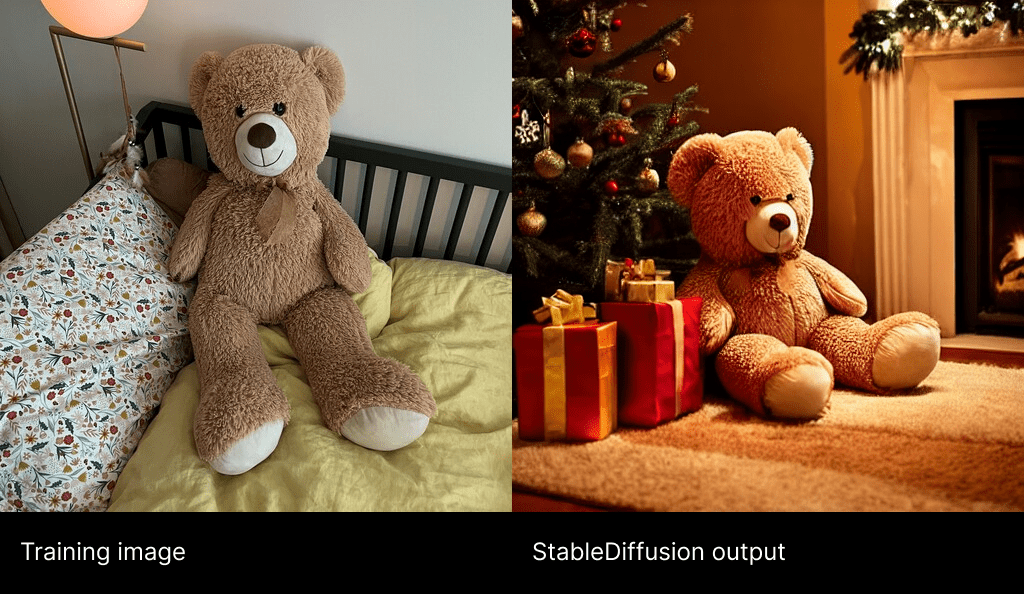

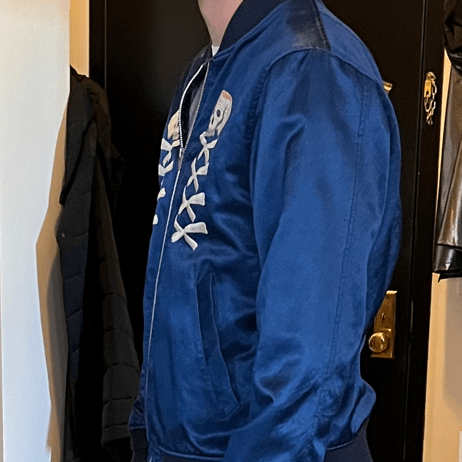

Using this open source tool we were able to take 10 images of a particular garment like these ones:

And use them to train a LoRA that could reproduce the garment quite accurately when combined with Stable Diffusion 1.5:

Training each LoRA took around 30 minutes on an NVIDIA GeForce RTX 3080 GPU. We probably could have gotten away with less images of the garment, which could reduce the training time to be between 10 and 15 minutes, and the LoRA itself is simply a .safetensors file that weights 9 megabytes.

Training models for humans

Once we were certain that we could faithfully reproduce garments, we turned our attention to humans.

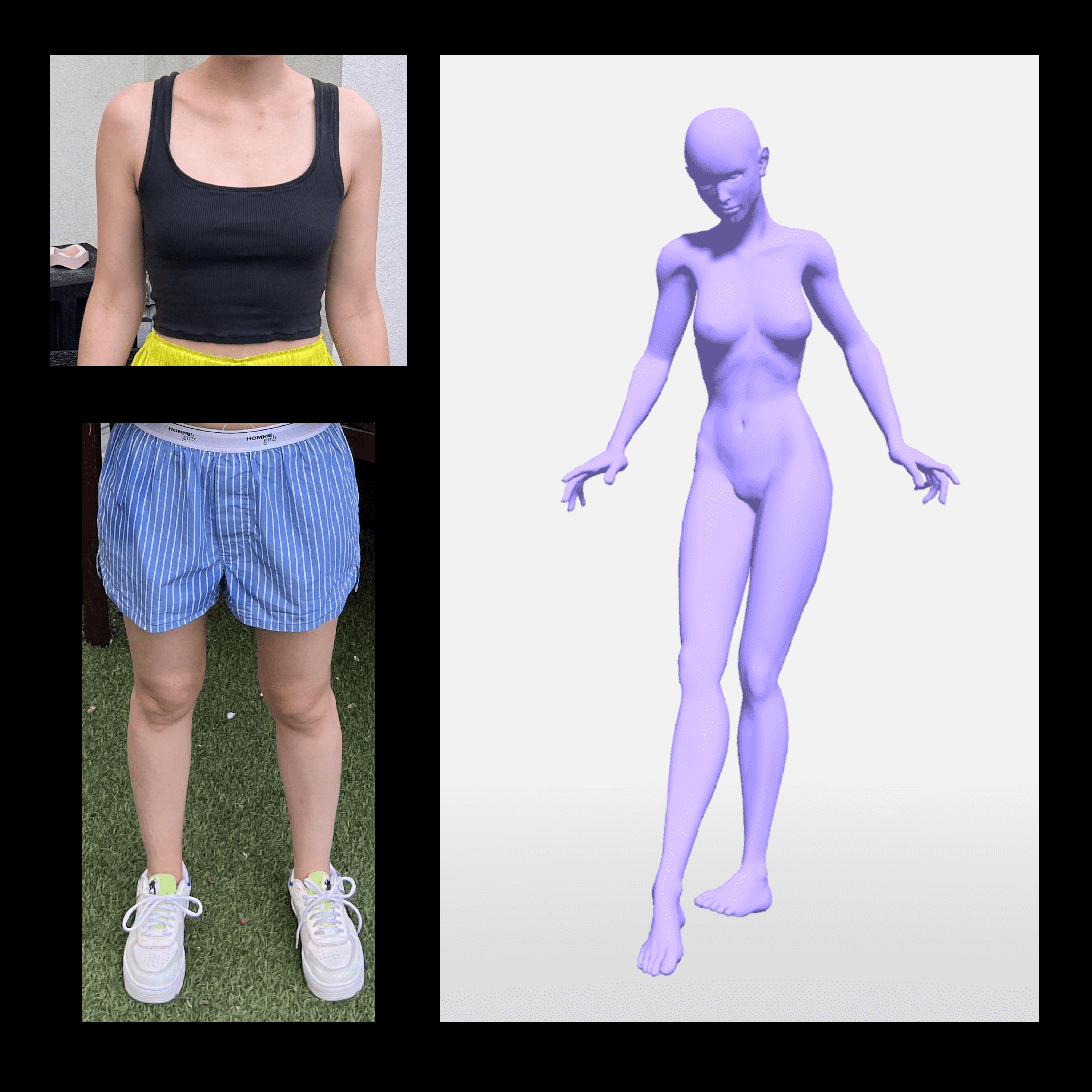

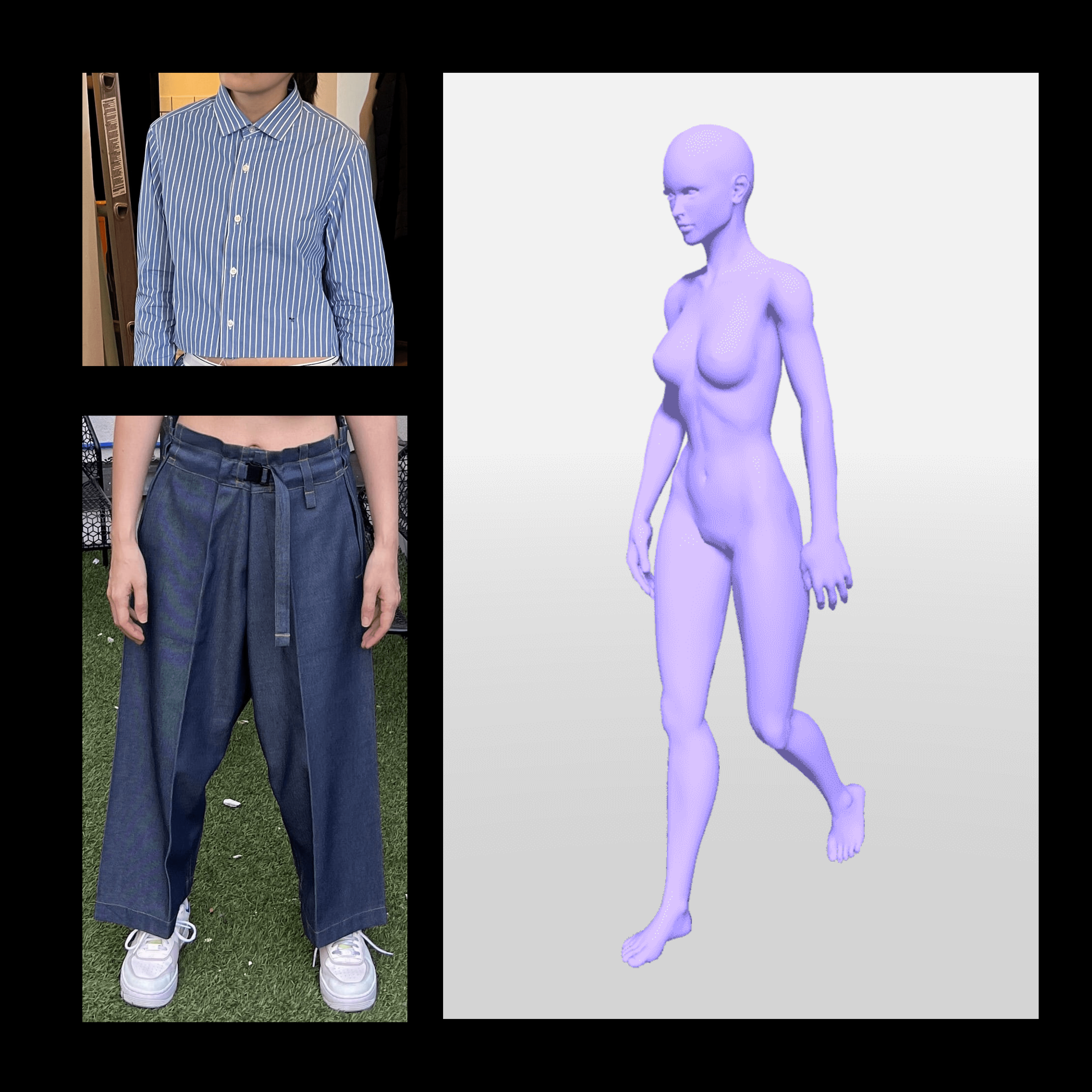

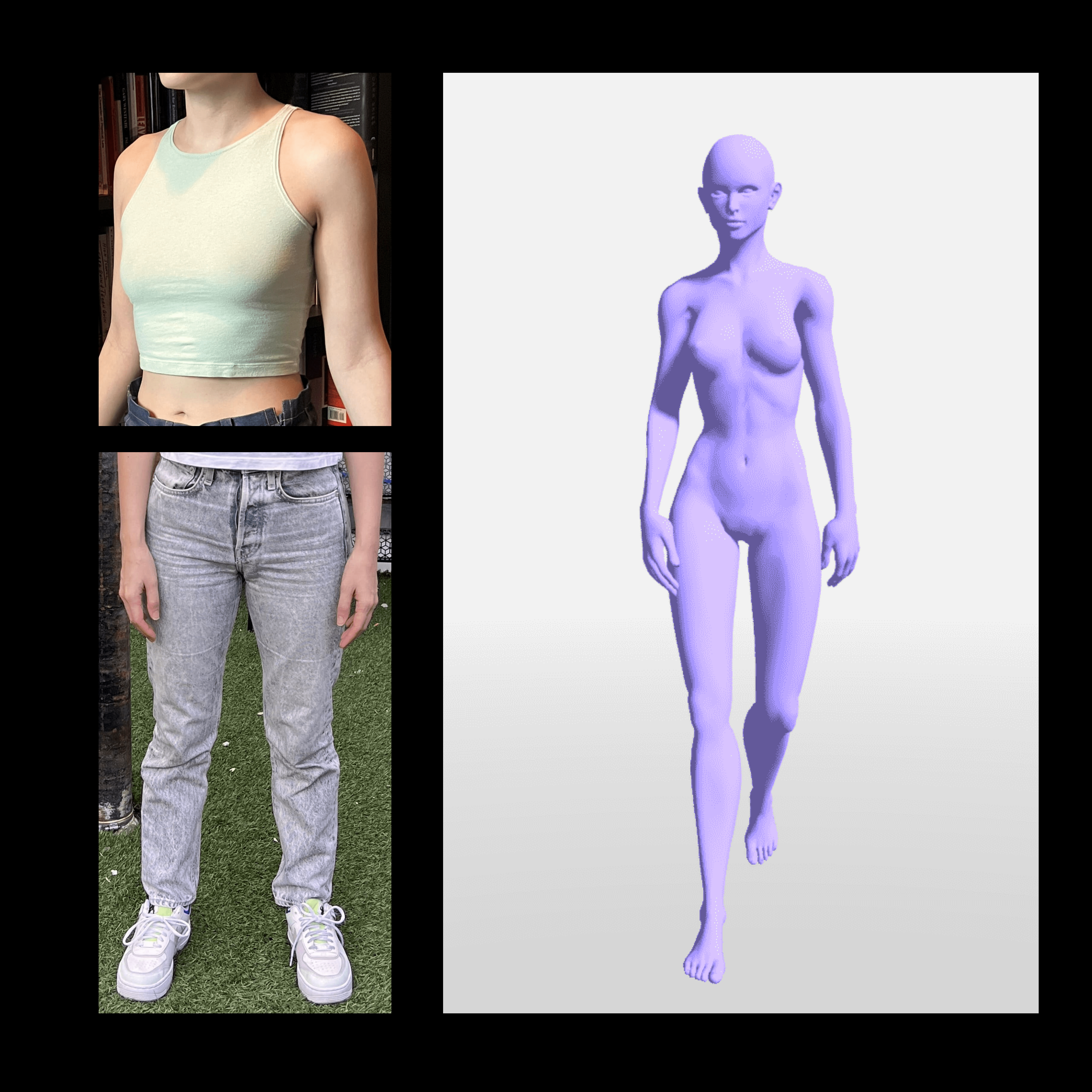

We found that it’s better to have 2 separate LoRAs for each person: one trained on full-body shots, and one trained on close-ups of their face.

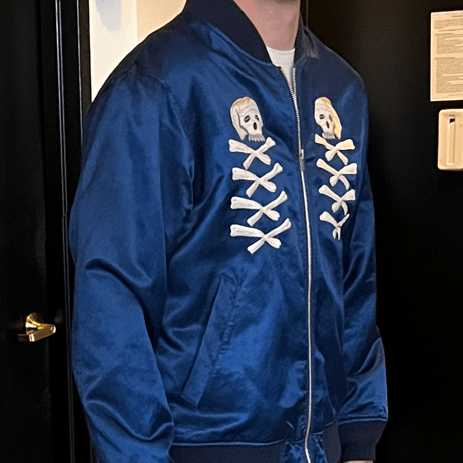

Using 30 images of a person was enough to train a LoRA that could accurately represent them, and we probably could have gotten away with less images. Here’s a comparison of a training image and a diffused one:

Inpainting outfits

We tried a number of different approaches to diffuse Jessie and Max wearing garments from their closets. The one that produced the best results is based on masking and inpainting. Here’s how it works:

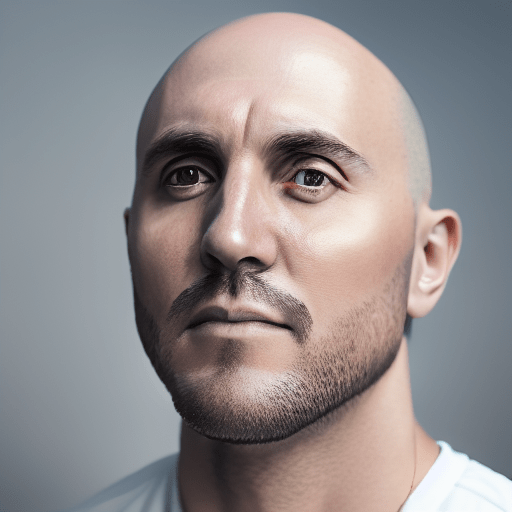

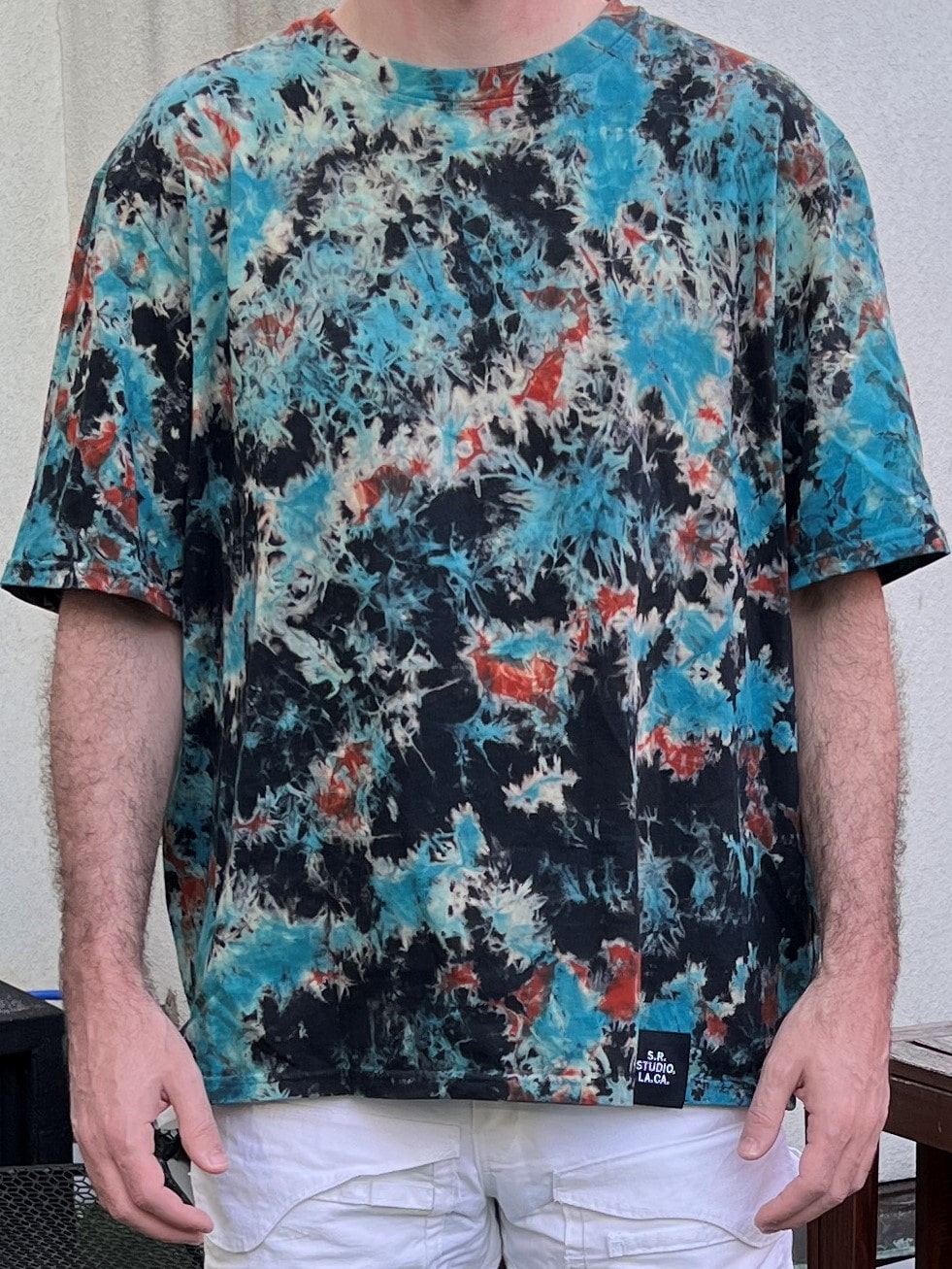

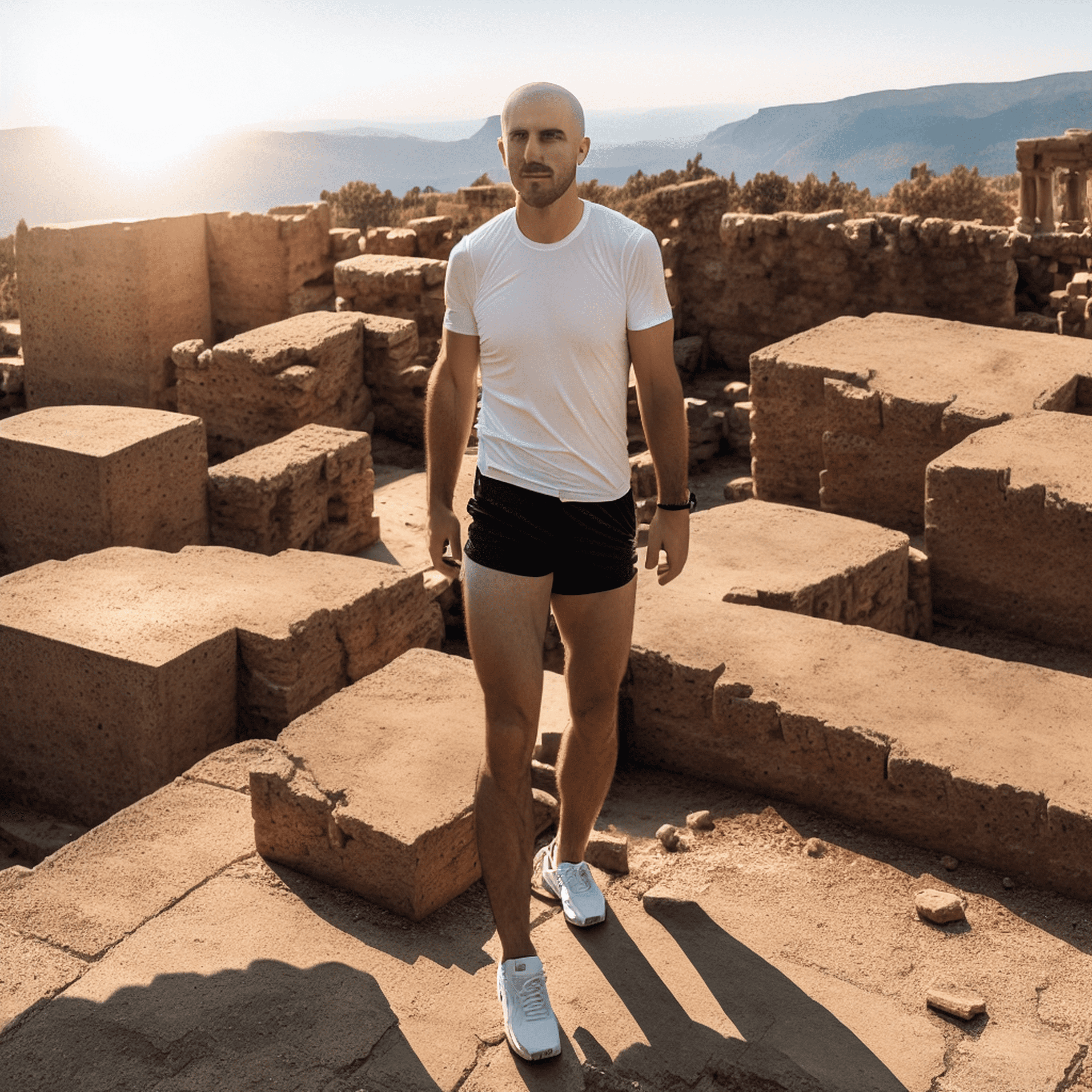

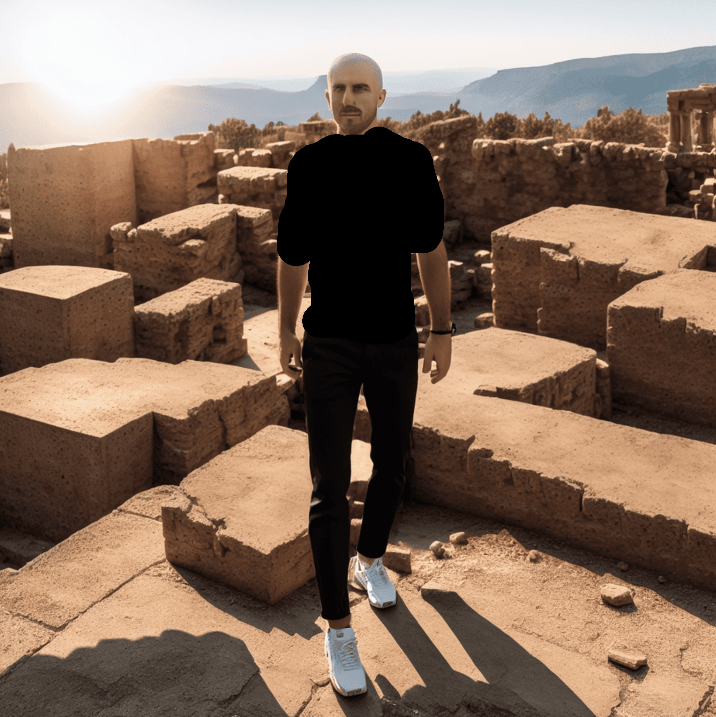

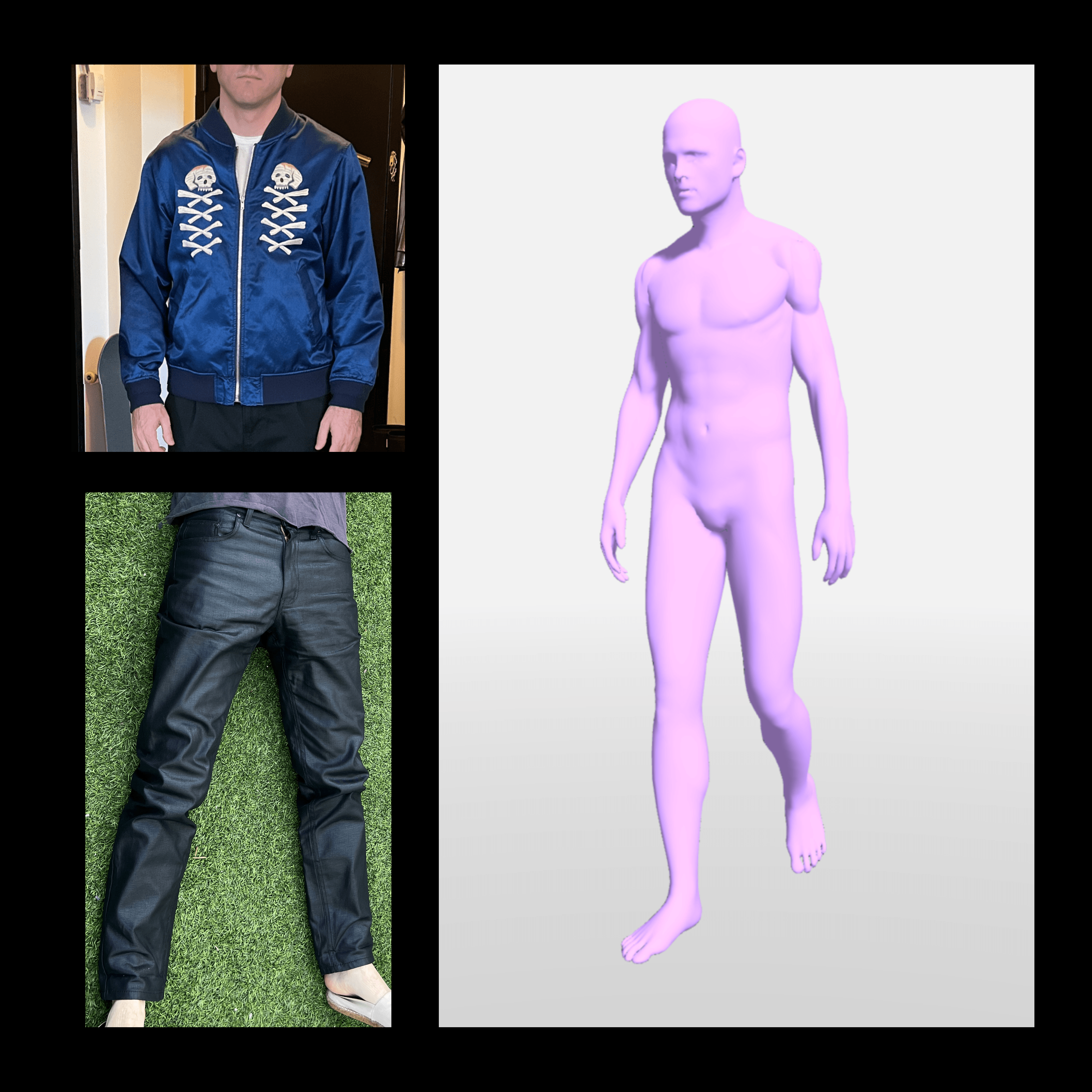

Let’s say we want to diffuse Max in this t-shirt and pants combination:

We would start out by diffusing a full-body shot of him wearing a generic outfit using his full-body LoRA.

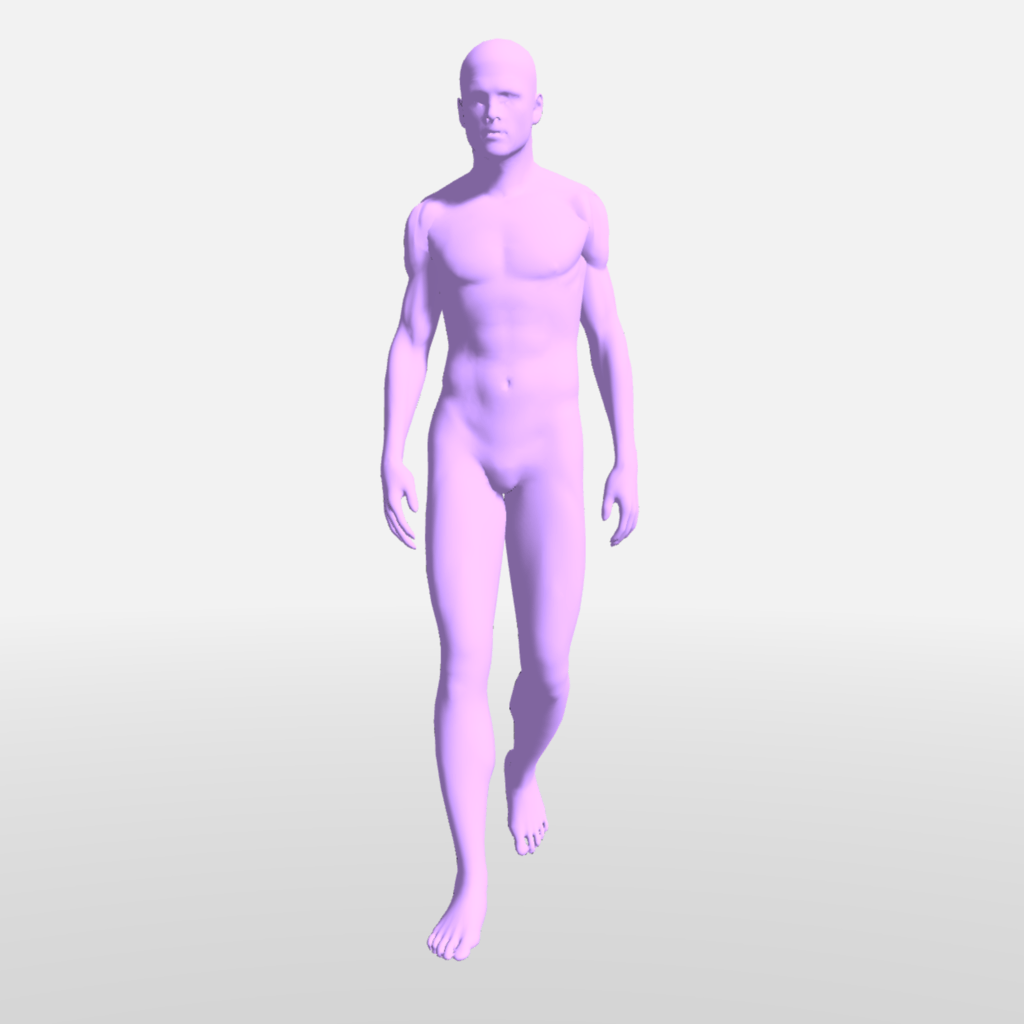

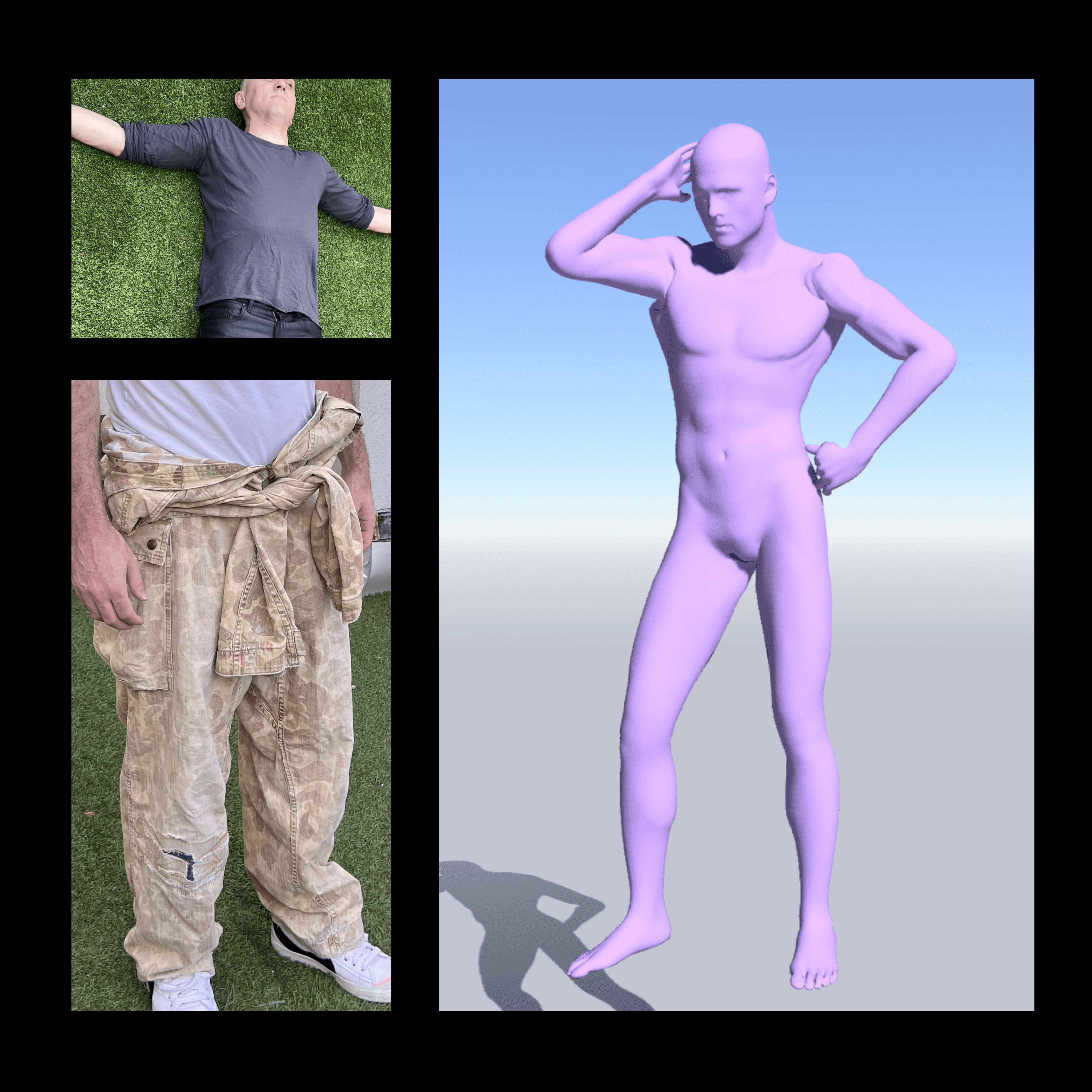

We can control the way his body is posed using ControlNet and the OpenPose model. In this example we will be feeding this image of a posed 3D character to the model:

It is at this stage in the process that we also need to define the background of the image through the prompt. For the example below we used a prompt along the lines of “Maxwell Neely-Cohen walking among Greek ruins wearing black shorts and a white t-shirt and white shoes”

Note how his face doesn’t look great. That’s because we are using his full-body LoRA. We will fix that later.

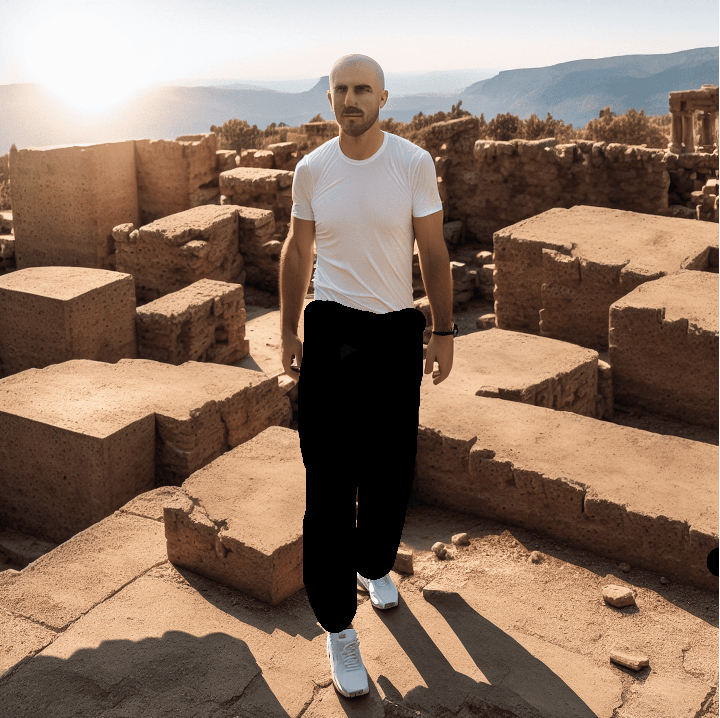

Once we have an initial image that we are happy with, we can start inpainting the clothes. For the pants we need to draw a mask around his legs:

And then use the pants LoRA to inpaint them within that mask. Our prompt can be as simple as “pants” (the keyword we used while training the LoRA), but sometimes it’s necessary to be more descriptive with prompts like “black pleated pants”.

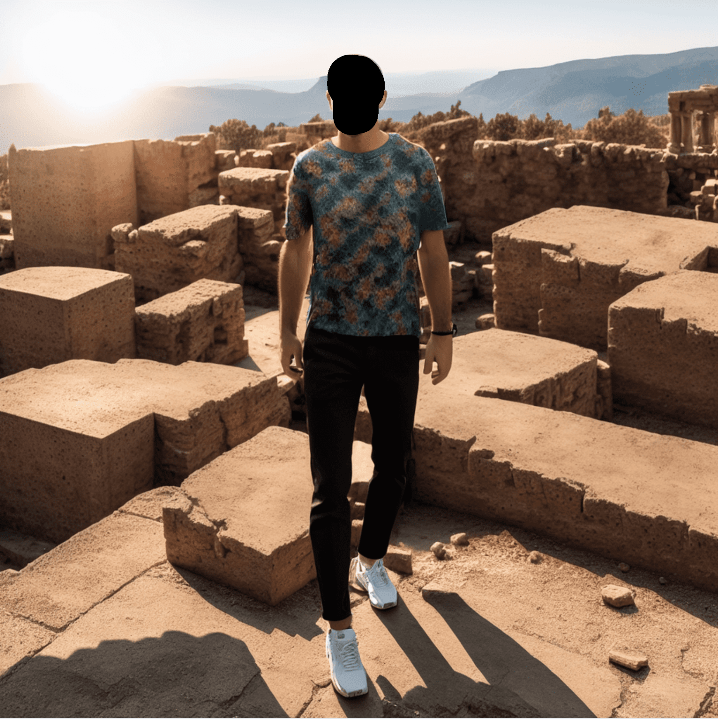

Next we repeat the same steps for the t-shirt. First we draw a mask:

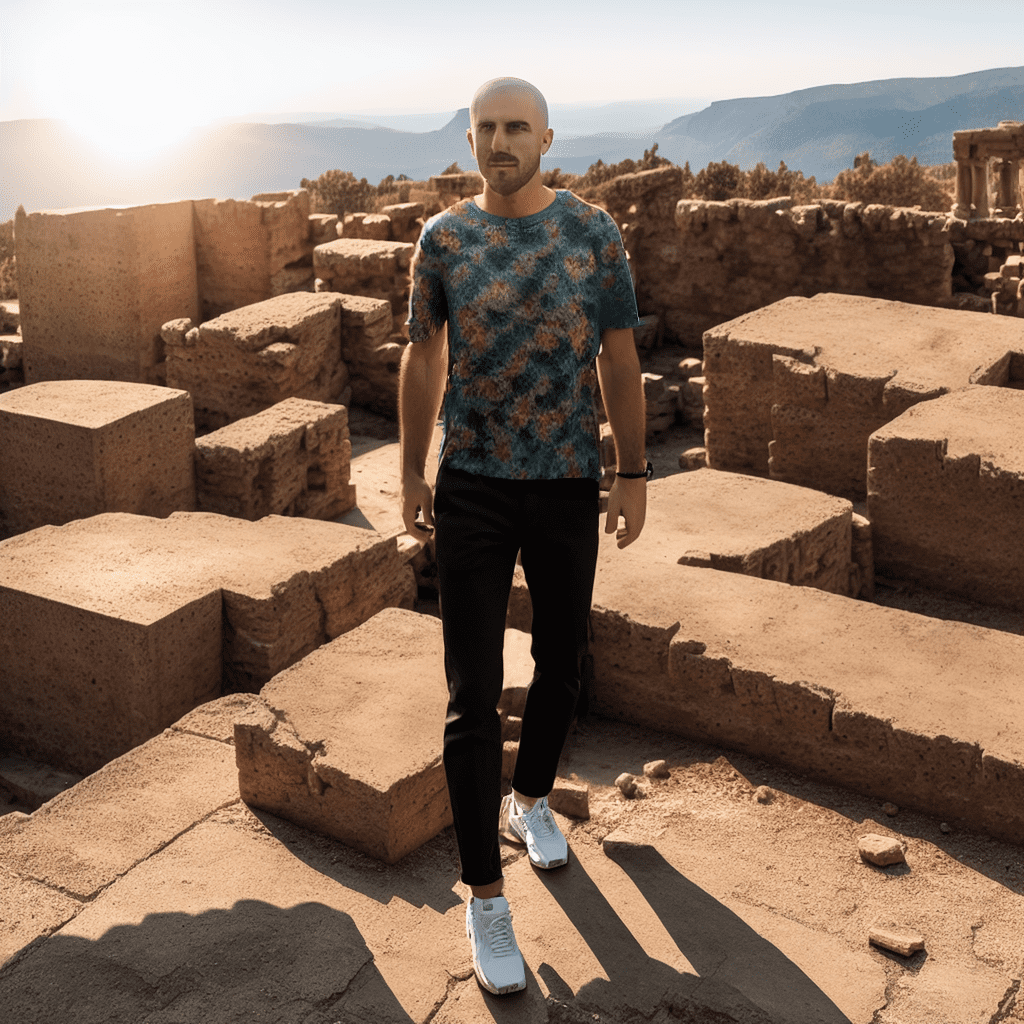

And then we use the t-shirt LoRA with a prompt like “t-shirt” or “tie dye t-shirt”:

The diffused t-shirt doesn’t exactly match the real one, but the image still tells us a lot about the vibes of the outfit, which is what we are after with the Clueless closet.

Finally, we repeat the same steps for the face. First we draw a mask:

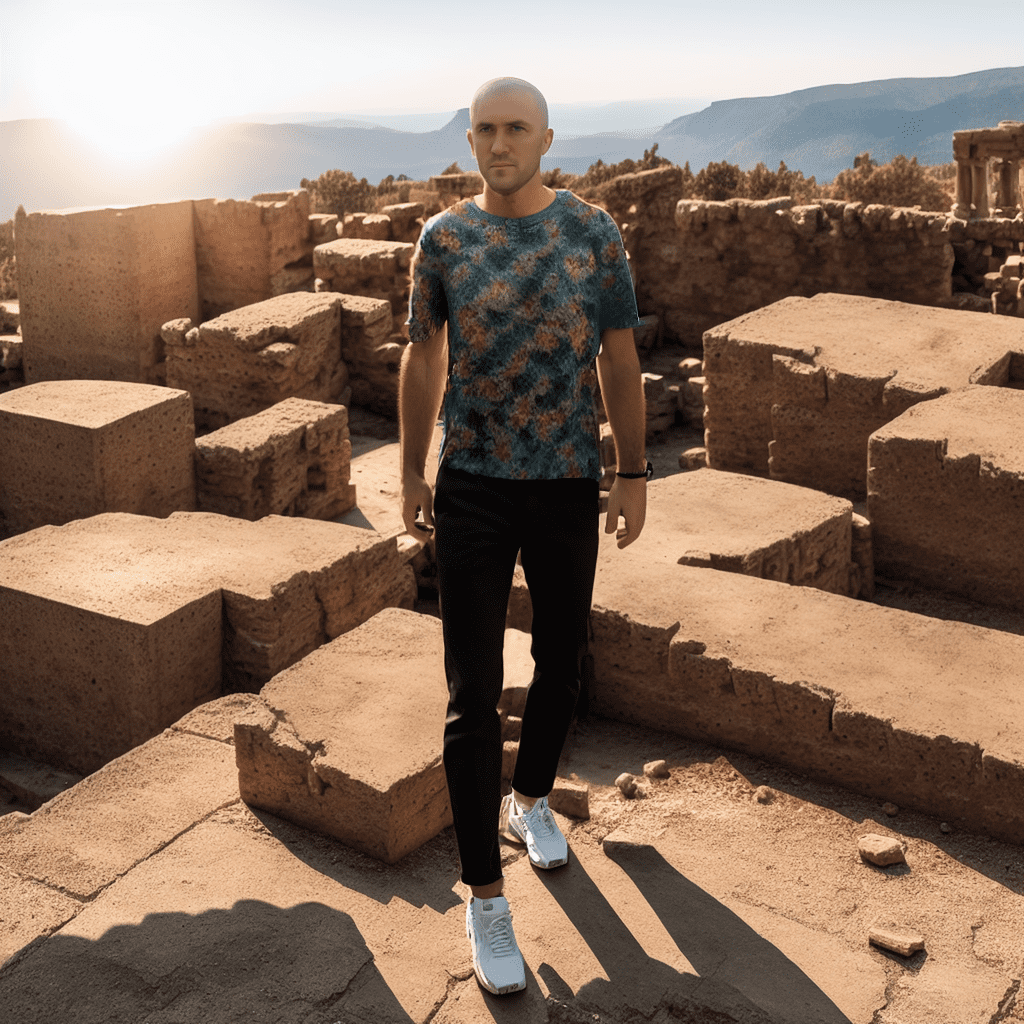

And then we use the face LoRA with a prompt like “Maxwell Neely-Cohen”:

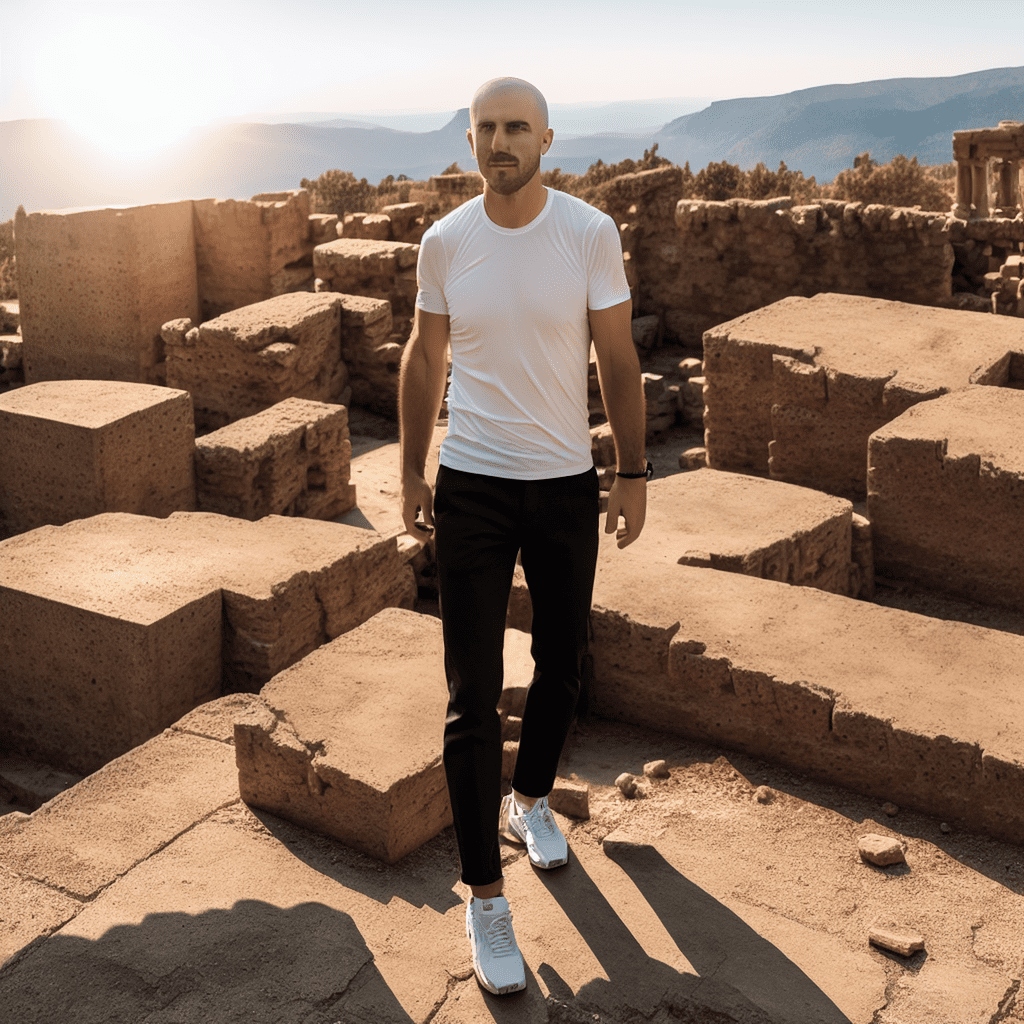

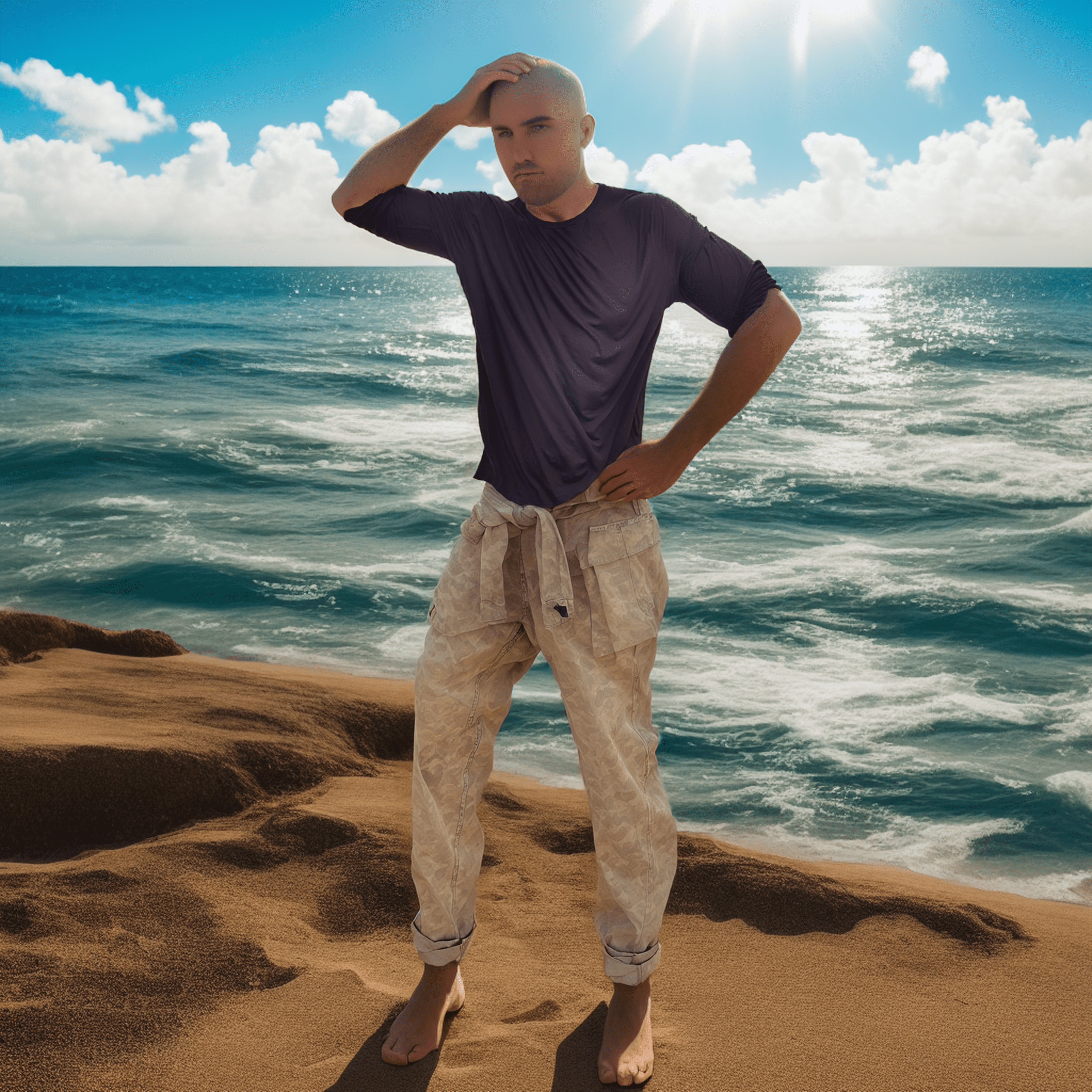

The final image is a surprisingly accurate representation of Max wearing those clothes.

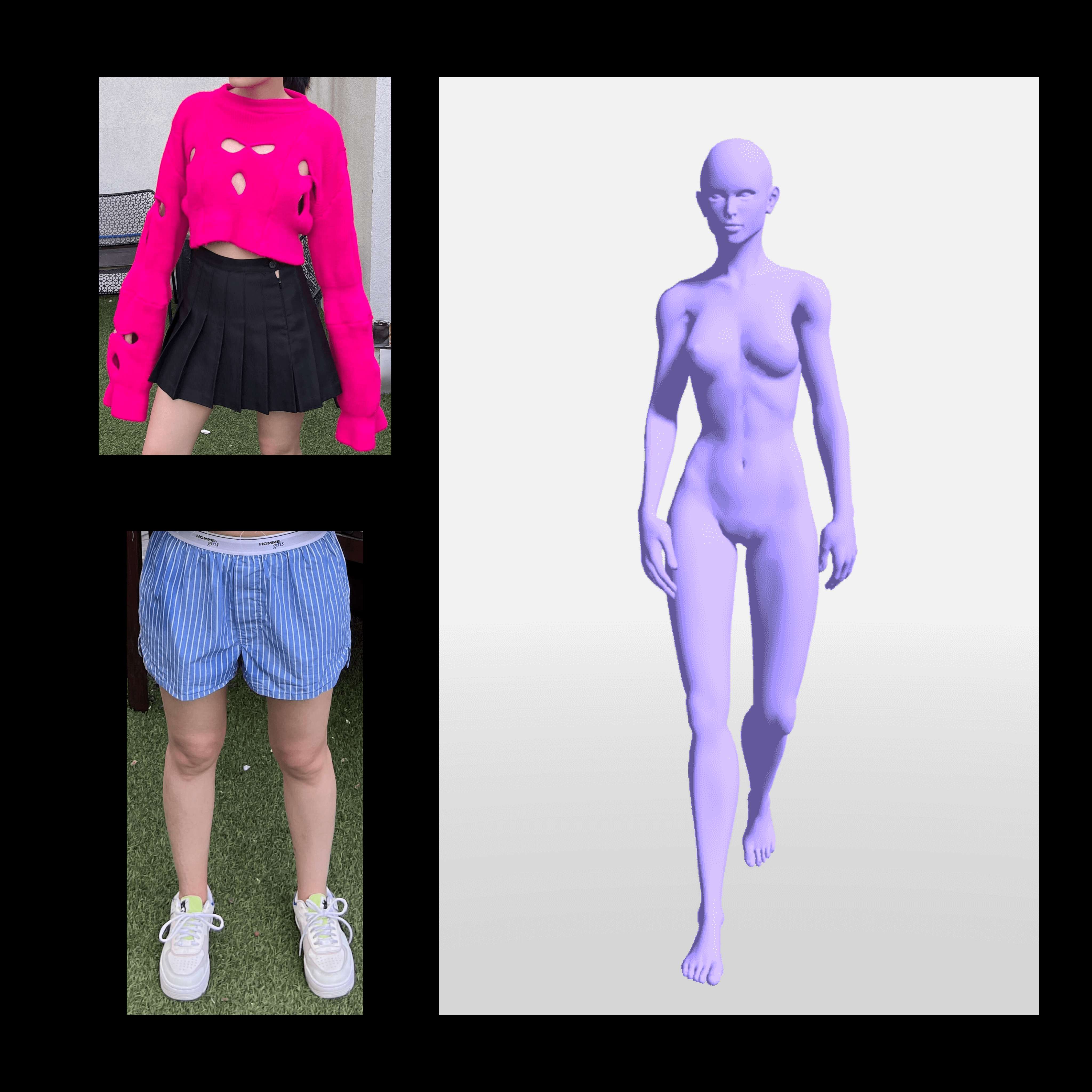

Below you will find examples of other images we diffused using this technique. Note how the color of Jessie’s hair changes frequently. That reflects the many hairstyles in the images we used to train the LoRA of her face:

One interesting thing to note in that final image is how the pant legs are rolled up. That detail isn’t present in any of the training images. The model must have rolled them up because the person is barefoot in the beach. That type of contextual understanding highlights how good these models are at fitting clothes onto human bodies.

Going beyond tops and bottoms

Once we realized how good these models are at inpainting clothes, we started wondering how far we could push them.

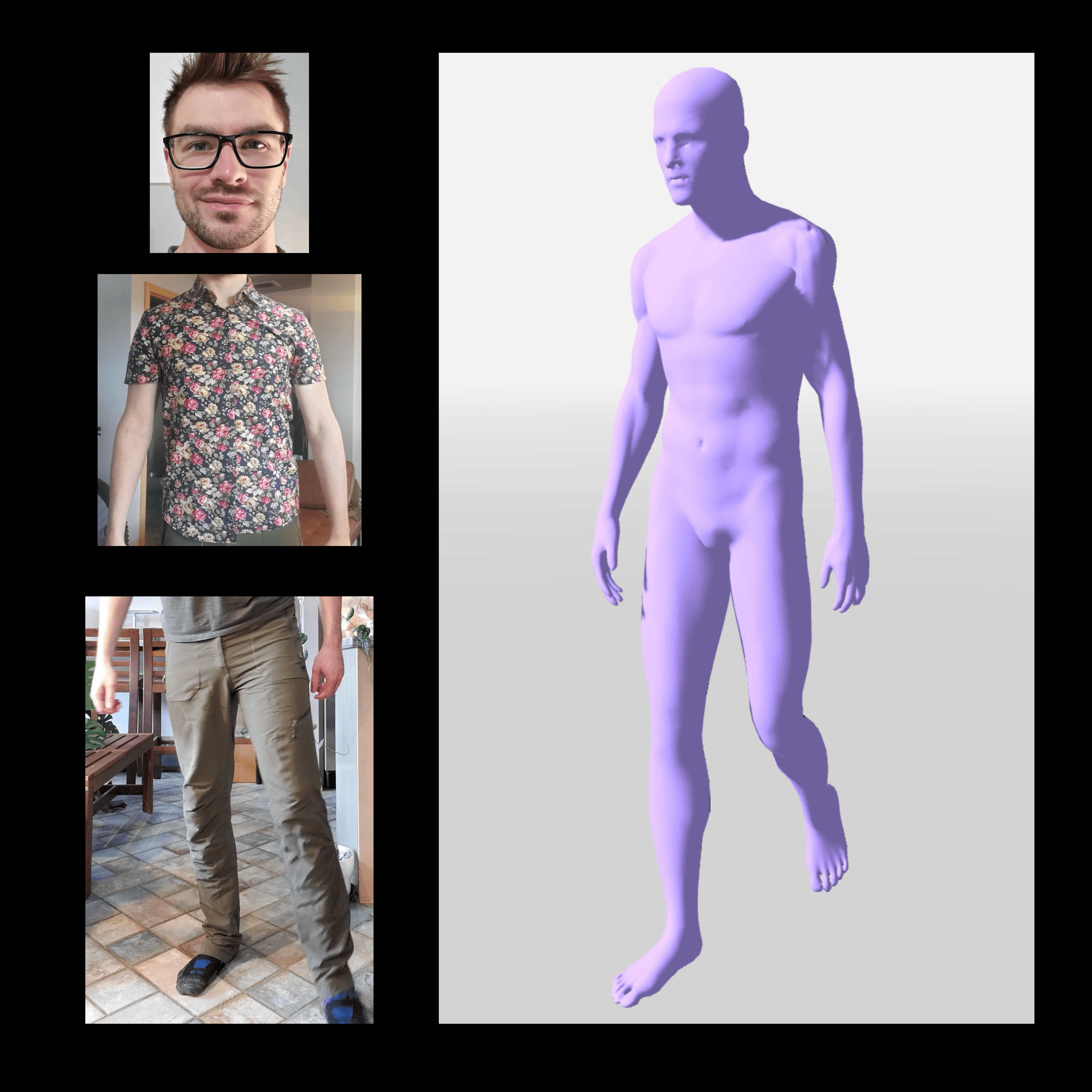

Here’s an image we diffused of the Spatial Commerce team’s very own 3D artist Brennan:

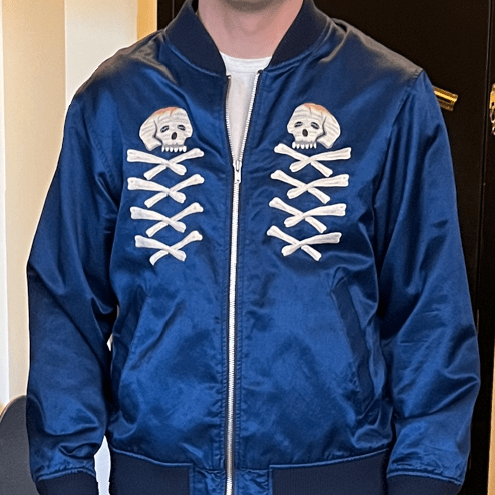

We wondered: if we drew a proper mask, could we inpaint the jacket shown below on top of the floral shirt? Could we layer clothes like that?

The answer is yes. Here is the mask we used and the resulting image:

How about adding a cap? Could we train a model of this cap and inpaint it on Brennan’s head?

The answer is yes again:

And what about shoes? Could we inpaint these shoes on Brennan’s feet?

Yes:

Even after playing with this technique for a couple of weeks, we kept discovering new things it could do. It never stopped surprising us.

Limitations

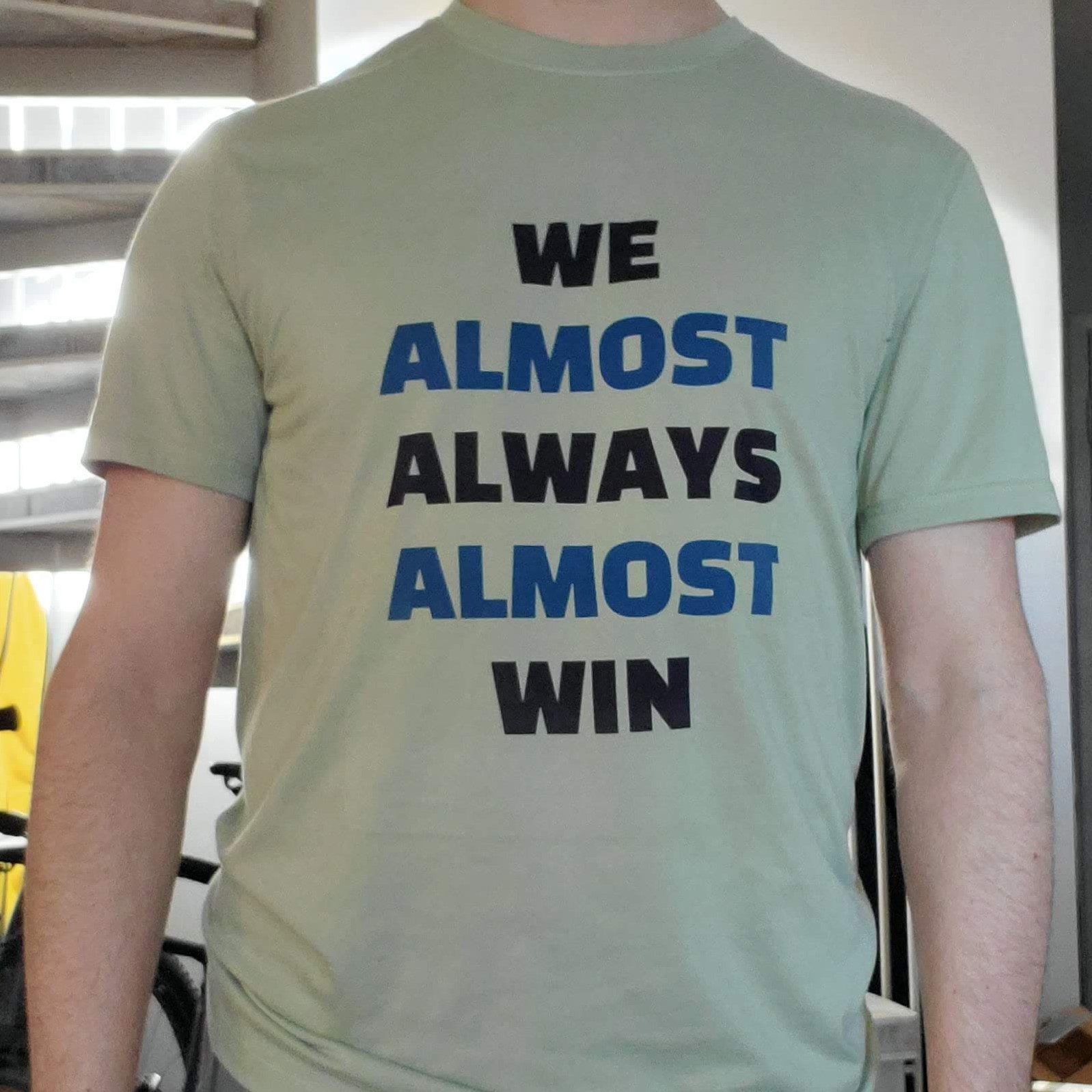

The biggest limitation of this technique is that it isn’t able to reproduce text or complex graphic designs. Too much is lost when those things go wrong, and it becomes difficult to evaluate the vibes of an outfit.

As an example, here are some t-shirts that are not well-suited for this approach right now:

Sadly, Stable Diffusion 1.5 doesn’t always win when it comes to text:

But we expect this to change soon with the release of models that can handle text like DeepFloyd IF.

Conclusions

When we set out on this project, we were hoping to make this concept video real:

The layered inpainting technique we have presented in this blog post allowed us to get really stunning results, but once we had validated it, we looked at the problem of trying to automate it.

We believe the masking step could be automated using a model like Meta’s Segment Anything, but even with proper masks of the legs and torso it can take dozens if not hundreds of image generations to achieve aesthetically pleasing results. Problems with hands, faces, feet and even backgrounds are extremely common.

Unless the quality of the diffused images becomes more consistent, an automated pipeline is likely to produce mostly poor results with occasional gems.

So for now the Clueless closet remains a piece of ’90s movie magic, but we believe the world will catch up with it really soon, and when that happens even the Spatial Commerce boys will become fashionable individuals.

Thanks to Jessie and Max for all the help they gave us during this project!