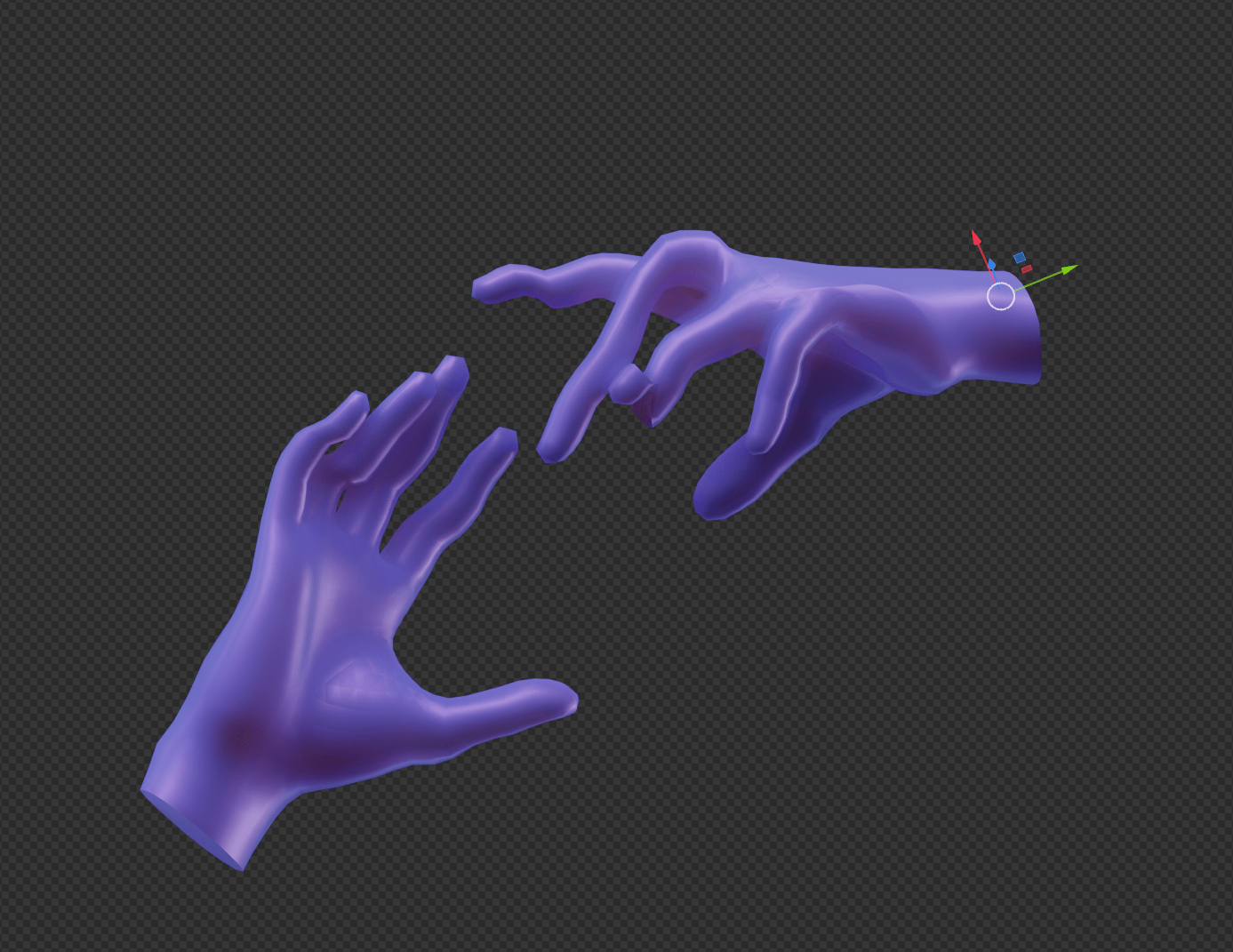

Today we want to share Handy, a tool we are open sourcing. It lets you motion-capture your hands and head while you wear a Meta Quest headset.

You can see a live demo of data captured with Handy here.

It currently outputs Alembic files, which you can import into tools like Blender to create animations, or into game engines like Unity and Unreal.

We have used Handy internally to create concept videos like the Matrix Stockroom. We worked hard to make this tool easy to use, so if you are an artist without a strong technical background, don’t worry: Handy is made for you.

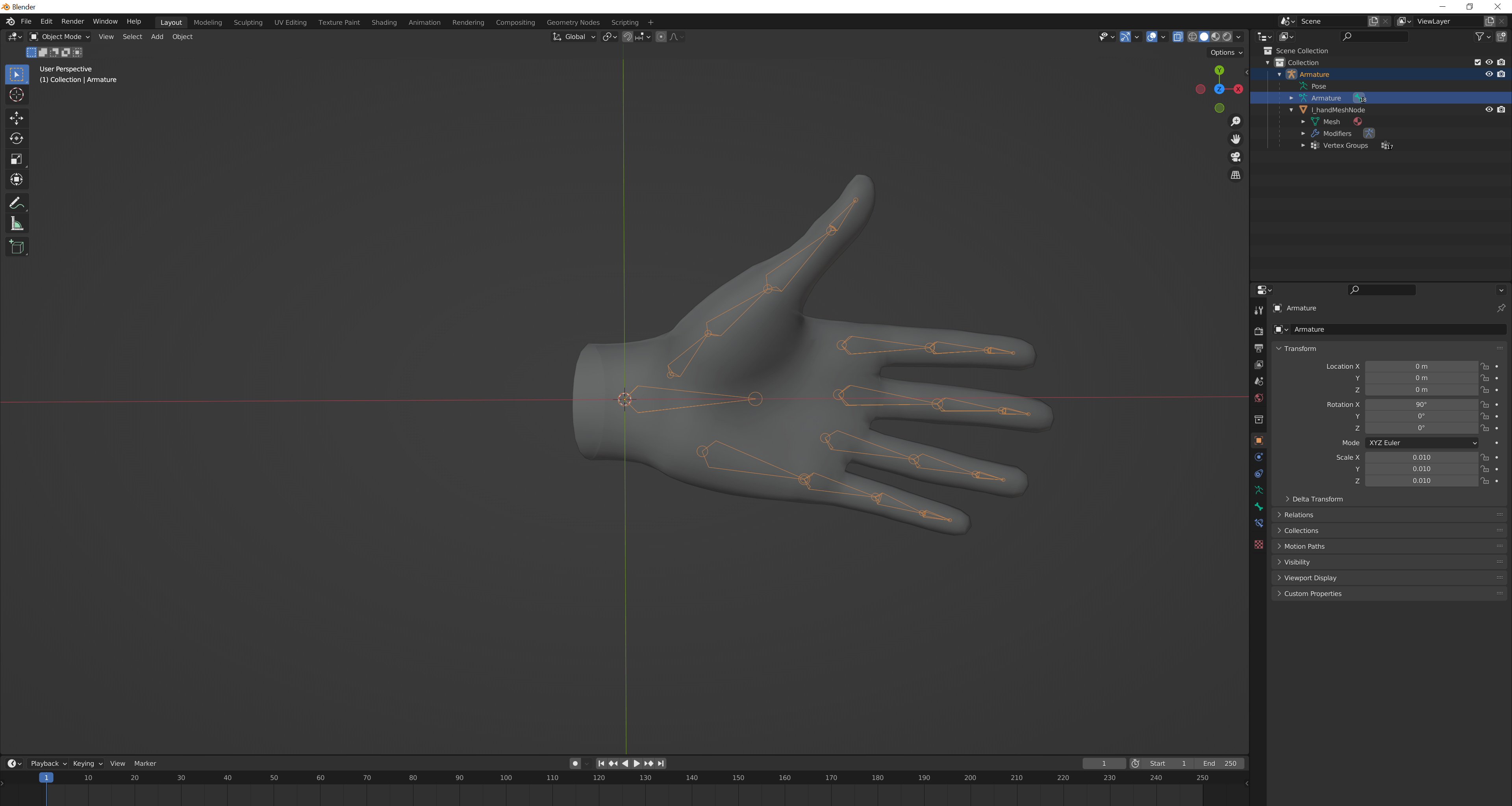

All you need to use Handy is a Meta Quest headset and Unity. The way it works is quite simple:

- When you start recording, the transforms of your hand joints and of your head are saved to a JSON file.

- When you stop recording, that JSON file is wirelessly sent from your headset to the Unity editor.

- When the Unity editor receives the file, it reads the transforms the file contains and replays them to generate an Alembic file.
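
To make the record-and-replay idea concrete, here is a minimal Python sketch of the same flow. It is not Handy's code: the JSON layout, the joint names, and the `encode_recording`, `decode_recording`, and `replay` helpers are all hypothetical, chosen only to illustrate how per-frame transforms could be written to JSON and read back in playback order.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class JointTransform:
    name: str  # hypothetical joint name, e.g. "head" or "left_index_1"
    position: tuple[float, float, float]
    rotation: tuple[float, float, float, float]  # quaternion (x, y, z, w)


@dataclass
class Frame:
    time: float  # seconds since the recording started
    joints: list[JointTransform]


def encode_recording(frames: list[Frame]) -> str:
    """Serialize recorded frames to a JSON string (assumed layout)."""
    return json.dumps({"frames": [asdict(f) for f in frames]})


def decode_recording(text: str) -> list[Frame]:
    """Rebuild frames from the JSON string produced by encode_recording."""
    data = json.loads(text)
    return [
        Frame(
            time=f["time"],
            joints=[
                JointTransform(j["name"], tuple(j["position"]), tuple(j["rotation"]))
                for j in f["joints"]
            ],
        )
        for f in data["frames"]
    ]


def replay(frames: list[Frame]):
    """Yield (time, joint name, position, rotation) in playback order."""
    for frame in sorted(frames, key=lambda f: f.time):
        for joint in frame.joints:
            yield frame.time, joint.name, joint.position, joint.rotation
```

In this sketch, the headset side would call `encode_recording` when recording stops, and the receiving side would call `decode_recording` and then step through `replay`, applying each transform to the matching bone before exporting the animation.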

While developing this tool, we were surprised by how simple the Oculus hands are. They are just standard rigged meshes with 18 relevant bones each.

We are excited to see what you’ll do with Handy! To start using it, visit its GitHub repository.