We have recently been exploring what online shopping will look like on a spatial internet.

Think about how you buy things online these days. You type what you want into a search bar, then scroll through dozens, if not hundreds, of options before choosing one.

Now the question is: what would that look like in VR?

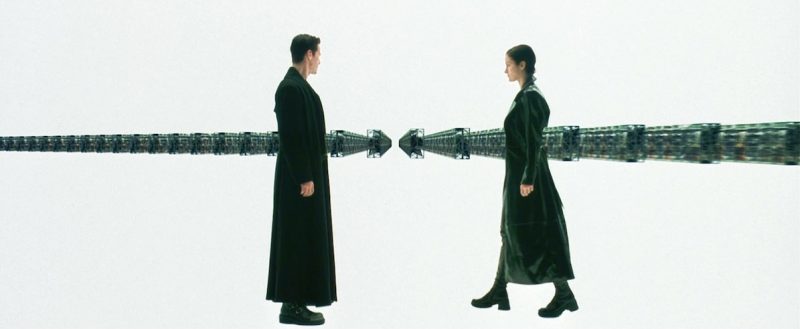

After talking about this problem for a while, one of us asked: “Do you guys remember that scene in The Matrix where Neo and Trinity are sent into an infinite stockroom?”

We all sure did. That’s the kind of scene you never forget.

Presenting an infinite array of objects to a user as a response to a search query is interesting, but how will they navigate and reduce that array to find something they like? That’s where voice commands and large language models come in. Meta already offers a fantastic Voice SDK, so this is even possible today!

We see this combination of spatial search results and voice commands as one possible way to bring the internet’s effectively infinite scale and powerful filters into VR.

The video below illustrates this concept and serves as our homage to The Matrix ❤️.

One cool thing to note: the hands you see in the video were motion-captured using a Meta Quest headset and a tool we open-sourced called Handy.

Animating hands by hand (no pun intended) is horrendously complicated, so we hope this tool helps you if you ever want to add hands to a concept video or cutscene.